Four years after Russia-linked groups stoked divisions in the U.S. presidential election on social media platforms, a new report shows that Moscow’s campaign hasn’t let up and has become harder to detect.

The report from University of Wisconsin-Madison professor Young Mie Kim found that Russia-linked social media accounts are posting about the same divisive issues — race relations, gun laws and immigration — as they did in 2016, when the Kremlin polluted American voters’ feeds with messages about the presidential election. Facebook has since removed the accounts.

Since then, however, the Russians have grown better at imitating U.S. campaigns and political fan pages online, said Kim, who analyzed thousands of posts. She studied more than 5 million Facebook ads during the 2016 election, identifying Russia’s fingerprints on some of the messages through an ad-tracking app. Her review is co-published by the Brennan Center for Justice, a law and policy institute, where she is a scholar.

The Russian improvements make it harder for voters and social media platforms to identify the foreign interference, Kim said.

“For normal users, it is too subtle to discern the differences,” Kim said. “By mimicking domestic actors, with similar logos (and) similar names, they are trying to avoid verification.”

Kim’s report comes weeks after U.S. intelligence officials briefed lawmakers on Russian efforts to stir chaos in American politics and undermine public confidence in this year’s election. The classified briefing detailed Russian efforts to boost the White House bids of both Republican President Donald Trump and Democratic Sen. Bernie Sanders.

Last month, FBI Director Christopher Wray warned that Russia was still waging “information warfare” with an army of fictional social media personas and bots that spread disinformation.

In a rare, joint statement Monday, the leaders of America’s intelligence agencies cautioned that foreign actors were spreading false information ahead of Super Tuesday to “cause confusion and create doubt in our system.”

But intelligence officials have not released any details about the type of disinformation or explained how Americans should protect themselves from it.

Russia has repeatedly denied interfering in the U.S. elections, and did again on Thursday.

“You just want us to repeat again that we have nothing to do with the U.S. elections,” Russia’s foreign ministry spokeswoman Maria Zakharova said.

Russia has refined its techniques since 2016 and new foreign actors have joined the game, making it harder to identify Kremlin-backed disinformation, said Thomas Rid, a national security expert who has written a book about the Kremlin's history of spreading disinformation.

“I do pick up some chatter that the visibility into Russian operations is not as good as it may appear,” Rid said. “It’s very difficult to spot Russian interference today.”

Still, it’s unclear how much impact — if any — Russian disinformation tactics have had on voters. Some of Russia’s social media ads in the 2016 U.S. presidential election were seen by only a handful of people and their impact has been “vastly overstated,” Rid said.

But Kim’s report pulls back the curtain on some of the online techniques Russia has already used in this year’s presidential race, including targeting battleground states with its divisive messaging.

Her review identified thousands of posts last year from more than 30 Instagram accounts, which Facebook removed from the site in October after concluding that they originated from Russia and had links to the Internet Research Agency, a Russian operation that targeted U.S. audiences in 2016. Facebook owns Instagram. Analysis from Graphika, a disinformation security firm, also concluded at the time that the accounts went to “great lengths to hide their origins.”

“We will keep evolving our defenses and announcing these influence campaigns, as we did more than 50 times last year,” Facebook said in an email.

After being caught off guard by Russia's 2016 election interference, Facebook, Google, Twitter and others put safeguards in place to prevent it from happening again. These include taking down posts, groups and accounts that engage in "coordinated inauthentic behavior," and strengthening verification procedures for political ads.

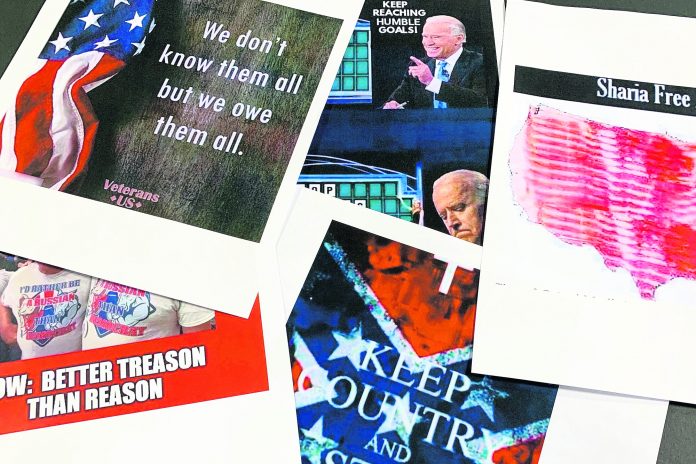

The accounts appeared to mimic existing political ones, including one called "Bernie.2020_" that used campaign logos to make it seem connected to Sanders' campaign or like a fan page for his supporters, Kim said.

Some presidential candidates also were targeted directly.

An account called Stop.Trump2020 posted anti-Trump content. Other Instagram accounts pushed negative messages about Democrat Joe Biden.

“Like for Trump 2020,” said one meme featuring a portrait photo of Trump and a photo of Biden. “Ignore for Biden 2020.”

It was posted by an Instagram account called Iowa.Patriot, one of several accounts that aimed their messaging at specific communities in crucial swing states such as Michigan, Ohio and Iowa.

The accounts also appeared to capitalize on other divisive American issues that emerged after the 2016 election.

Some Instagram accounts posed as liberal feminist groups amid the fallout from the #MeToo movement, which has exposed sexual misconduct allegations against high-profile public figures. Others targeted conservative women with posts that criticized abortion.

“I don’t need feminism, because real feminism is about equal opportunity and respect for women. NOT about abortions, free birth control ….” a meme on one account read.

The accounts varied in how often they posted, the size of their following and the traction the posts received. But they carried the hallmarks of a Russian-backed online disinformation campaign, Kim said.

“They’re clearly adapting to current affairs,” Kim said. “Targeting both sides with messages is very unique to Russia.”